Somewhen ago (it’s always somewhen ago; most of the world never seems to catch up with what’s already happened!:-( I started dabbling with the OpenCorporates API to generate co-director corporate maps that showed companies linked by multiple directors. It must have been a bad idea because no-one could see any point in it, not even interestingness… (Which suggests to me that boards made up of directors are similarly meaningless? In which case, how are companies supposed to hold themselves to account?)

I tend to disagree. If I hadn’t been looking at connected companies around food processing firms, I would never have learned that one that meat processors cope with animal fat waste is to feed it into the biodiesel raw material supply chain.

Anyway, if we ever get to see a beneficial ownership register, a similar approach should work to generate maps showing how companies sharing beneficial owners are linked. (The same approach also drives my emergent social positioning Twitter maps and the Wikipedia semantic maps I posted about again recently.)

As a possible precursor to that, I thought I’d try to reimplement the code (in part to see if a better approach came to mind) using data grabbed directly from Companies House via their API. I’d already started dabbling with the API (Chat Sketches with the Companies House API) so it didn’t take much more to get a grapher going…

But first, I realise in that earlier post I’d missed the function for actually calling the API – so here it is:

import urllib2, base64, json

from urllib import urlencode

from time import sleep

def url_nice_req(url,t=300):

try:

return urllib2.urlopen(url)

except HTTPError, e:

if e.code == 429:

print("Overloaded API, resting for a bit...")

time.sleep(t)

return url_req(url)

#Inspired by http://stackoverflow.com/a/2955687/454773

def ch_request(CH_API_TOKEN,url,args=None):

if args is not None:

url='{}?{}'.format(url,urlencode(args))

request = urllib2.Request(url)

# You need the replace to handle encodestring adding a trailing newline

# (https://docs.python.org/2/library/base64.html#base64.encodestring)

base64string = base64.encodestring('%s:' % (CH_API_TOKEN)).replace('\n', '')

request.add_header("Authorization", "Basic %s" % base64string)

result = url_nice_req(request)

return json.loads(result.read())

CH_API_TOKEN='YOUR_API_TOKEN_FROM_COMPANIES_HOUSE'

In the original implementation, I stored the incremental search results in a dict; in the reimplementation, I thought I’d make use of a small SQLite database.

import sqlite3

db=None

memDB=":memory:"

tmpDB='example.db'

if db in locals():

db.close()

db = sqlite3.connect(tmpDB)

c = db.cursor()

for drop in ['directorslite','companieslite','codirs','coredirs','singlecos']:

c.execute('''drop table if exists {}'''.format(drop))

c.execute('''create table directorslite

(dirnum text primary key,

dirdob integer,

dirname text)''')

c.execute('''create table companieslite

(conum text primary key,

costatus text,

coname text)''')

c.execute('''create table codirs

(conum text,

dirnum text,

typ text,

status text)''')

c.execute('''create table coredirs

(dirnum text)''')

c.execute('''create table singlecos

(conum text,

coname text)''')

cosdone=[]

cosparsed=[]

dirsdone=[]

dirsparsed=[]

codirsdone=[]

The code itself runs in two passes. The first pass builds up a seed set of directors from a single company or set of companies using a simple harvester:

def updateOnCo(seed,typ='current',role='director'):

print('harvesting {}'.format(seed))

#apiNice()

o=ch_getCompanyOfficers(seed,typ=typ,role=role)['items']

x=[{'dirnum':p['links']['officer']['appointments'].strip('/').split('/')[1],

'dirdob':p['date_of_birth']['year'] if 'date_of_birth' in p else None,

'dirname':p['name']} for p in o]

z=[]

for y in x:

if y['dirnum'] not in dirsdone:

z.append(y)

dirsdone.append(y['dirnum'])

if isinstance(z, dict): z=[z]

print('Adding {} directors'.format(len(z)))

c.executemany('INSERT INTO directorslite (dirnum, dirdob,dirname)'

'VALUES (:dirnum,:dirdob,:dirname)', z)

for oo in [i for i in o if i['links']['officer']['appointments'].strip('/').split('/')[1] not in dirsparsed]:

oid=oo['links']['officer']['appointments'].strip('/').split('/')[1]

print('New director: {}'.format(oid))

#apiNice()

ooo=ch_getAppointments(oid,typ=typ,role=role)

#apiNice()

#Play nice with the api

sleep(0.5)

#add company details

x=[{'conum':p['appointed_to']['company_number'],

'costatus':p['appointed_to']['company_status'] if 'company_status' in p['appointed_to'] else '',

'coname':p['appointed_to']['company_name'] if 'company_name' in p['appointed_to'] else ''} for p in ooo['items']]

z=[]

for y in x:

if y['conum'] not in cosdone:

z.append(y)

cosdone.append(y['conum'])

if isinstance(z, dict): z=[z]

print('Adding {} companies'.format(len(z)))

c.executemany('INSERT INTO companieslite (conum, costatus,coname)'

'VALUES (:conum,:costatus,:coname)', z)

for i in x:cosdone.append(i['conum'])

#add company director links

dirnum=ooo['links']['self'].strip('/').split('/')[1]

x=[{'conum':p['appointed_to']['company_number'],'dirnum':dirnum,

'typ':'current','status':'director'} for p in ooo['items']]

c.executemany('INSERT INTO codirs (conum, dirnum,typ,status)'

'VALUES (:conum,:dirnum,:typ,:status)', x)

print('Adding {} company-directorships'.format(len(x)))

dirsparsed.append(oid)

cosparsed.append(seed)

The set of seed companies may be companies associated with one or more specified seed directors, for example:

def dirCoSeeds(dirseeds,typ='all',role='all'):

''' Find companies associated with dirseeds '''

coseeds=[]

for d in dirseeds:

for c in ch_getAppointments(d,typ=typ,role=role)['items']:

coseeds.append(c['appointed_to']['company_number'])

return coseeds

dirseeds=[]

for d in ch_searchOfficers('Bernard Ecclestone',n=10,exact='forename')['items']:

dirseeds.append(d['links']['self'])

coseeds=dirCoSeeds(dirseeds,typ='current',role='director')

Then I call a first pass of the co-directed companies search with the set of company seeds:

typ='current'

#Need to handle director or LLP Designated Member

role='all'

for seed in coseeds:

updateOnCo(seed,typ=typ,role=role)

c.executemany('INSERT INTO coredirs (dirnum) VALUES (?)', [[d] for d in dirsparsed])

seeder_roles=['Finance Director']

#for dirs in seeded_cos, if dir_role is in seeder_roles then do a second seeding based on their companies

#TO DO

depth=0

Then we go for a crawl for as many steps as required… The approach I’ve taken here is to search through the current database to find the companies heuristically defined as codirected, and then feed these back into the harvester.

seeder=True

oneDirSeed=True

#typ='current'

#role='director'

maxdepth=3

#relaxed=0

while depth<maxdepth:

print('---------------\nFilling out level - {}...'.format(depth))

if seeder and depth==0:

#Another policy would be dive on all companies associated w/ dirs of seed

#In which case set the above test to depth==0

tofetch=[u[0] for u in c.execute(''' SELECT DISTINCT conum from codirs''')]

else:

duals=c.execute('''SELECT cd1.conum as c1,cd2.conum as c2, count(*) FROM codirs AS cd1

LEFT JOIN codirs AS cd2

ON cd1.dirnum = cd2.dirnum AND cd1.dirnum

WHERE cd1.conum < cd2.conum GROUP BY c1,c2 HAVING COUNT(*)>1

''')

tofetch=[x for t in duals for x in t[:2]]

#The above has some issues. eg only 1 director is required, and secretary IDs are unique to company

#Maybe need to change logic so if two directors OR company just has one director?

#if relaxed>0:

# print('Being relaxed {} at depth {}...'.format(relaxed,depth))

# duals=c.execute('''SELECT cd.conum as c1,cl.coname as cn, count(*) FROM codirs as cd JOIN companieslite as cl

# WHERE cd.conum= cl.conum GROUP BY c1,cn HAVING COUNT(*)=1

# ''')

# tofetch=tofetch+[x[0] for x in duals]

# relaxed=relaxed-1

if depth==0 and oneDirSeed:

#add in companies with a single director first time round

sco=[]

for u in c.execute('''SELECT DISTINCT cd.conum, cl.coname FROM codirs cd JOIN companieslite cl ON

cd.conum=cl.conum'''):

#apiNice()

o=ch_getCompanyOfficers(u[0],typ=typ,role=role)

if len(o['items'])==1 or u[0]in coseeds:

sco.append({'conum':u[0],'coname':u[1]})

tofetch.append(u[0])

c.executemany('INSERT INTO singlecos (conum,coname) VALUES (:conum,:coname)', sco)

#TO DO: Another stategy might to to try to find the Finance Director or other names role and seed from them?

#Get undone companies

print('To fetch: ',[u for u in tofetch if u not in cosparsed])

for u in [x for x in tofetch if x not in cosparsed]:

updateOnCo(u,typ=typ,role=role)

cosparsed.append(u)

#play nice

#apiNice()

depth=depth+1

#Parse companies

To visualise the data, I opted for Gephi, which meant having to export the data. I started off with a simple CSV edgelist exporter:

data=c.execute('''SELECT cl1.coname as Source,cl2.coname as Target, count(*) FROM codirs AS cd1

LEFT JOIN codirs AS cd2 JOIN companieslite as cl1 JOIN companieslite as cl2

ON cd1.dirnum = cd2.dirnum and cd1.conum=cl1.conum and cd2.conum=cl2.conum

WHERE cd1.conum 1''')

import csv

with open('output1.csv', 'wb') as f:

writer = csv.writer(f)

writer.writerow(['Source', 'Target'])

writer.writerows(data)

data= c.execute('''SELECT cl1.coname as c1,cl2.coname as c2 FROM codirs AS cd1

LEFT JOIN codirs AS cd2 JOIN singlecos as cl1 JOIN singlecos as cl2

ON cd1.dirnum = cd2.dirnum and cd1.conum=cl1.conum and cd2.conum=cl2.conum

WHERE cd1.conum < cd2.conum''')

with open('output1.csv', 'ab') as f:

writer = csv.writer(f)

writer.writerows(data)

but soon changed that to a proper graph file export, based on a graph built around the codirected companies using the networkx package:

import networkx as nx

G=nx.Graph()

data=c.execute('''SELECT cl.conum as cid, cl.coname as cn, dl.dirnum as did, dl.dirname as dn

FROM codirs AS cd JOIN companieslite as cl JOIN directorslite as dl ON cd.dirnum = dl.dirnum and cd.conum=cl.conum ''')

for d in data:

G.add_node(d[0], Label=d[1])

G.add_node(d[2], Label=d[3])

G.add_edge(d[0],d[2])

nx.write_gexf(G, "test.gexf")

I then load the graph file into Gephi to visualise the data.

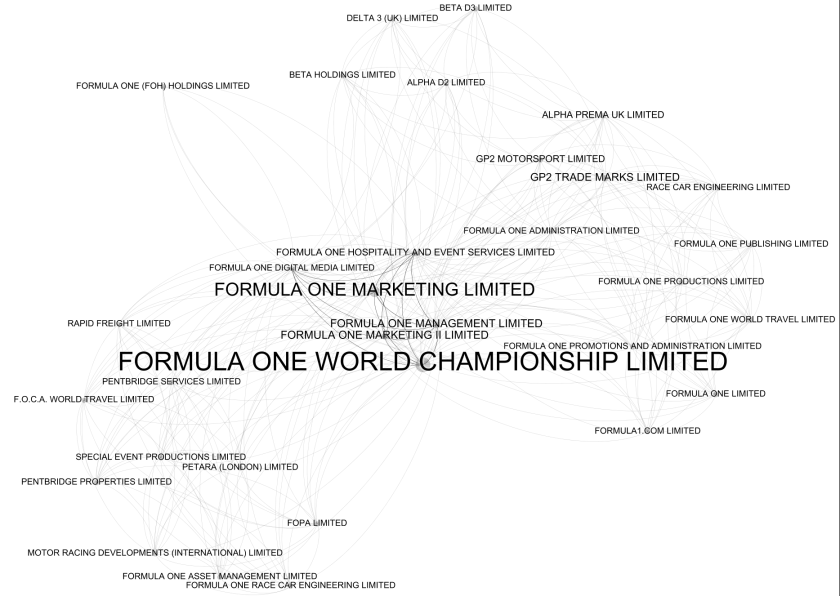

Here’s an example of the sort of thing we can get out for a search seeded on companies associated with the Bernie Ecclestone who directs at least one F1 related company:

On the to do list is to automate this a little bit more by adding some netwrok statistics, and possibly a first pass layout, in the networkx step.

In terms of time required to collect the data, the ,a href=”https://developer.companieshouse.gov.uk/api/docs/index/gettingStarted/rateLimiting.html”>Companies House API is rate limited to allow 600 requests within a five minute period. Many company networks can be mapped within the 600 call limit, but even for larger networks, the trawl doesn’t take too long even if two or three rest periods are required.

Is anyone doing this sort of thing in R ???

I haven’t seen an R package for it, but from a quick test I can a response to a JSON returning query using:

#—

CH_API=’MY_API_TOKEN’

API_PATH=’https://api.companieshouse.gov.uk’

library(httr)

n=50

si=”

q=’Bernard Ecclestone’

URL=paste(API_PATH,’/search/officers?q=’,URLencode(q),’&items_per_page=’,n,’&start_index’,si,sep=”)

req = GET(URL,authenticate(CH_API_TOKEN, “”, type = “basic”))

content(req)

#—

then we can peek into the response using something like:

#—-

c=content(req)

items=c$items

for (i in 1:length(items)){

print(paste(items[[i]]$title,items[[i]]$snippet,sep=’::’))

}

#—-