Earlier this year I started trying to pull together some of my #f1datajunkie R-related ramblings together in a book form. The project stalled, but to try to reboot it I’ve started publishing it as a living book over on Leanpub. Several of the chapters are incomplete – with TO DO items sketched in, others are still unpublished. The beauty of the Leanpub model is that if you buy a copy, you continue to get access to all future updated versions of the book. (And my idea is that by getting the book out there as it is, I’ll feel as if there’s more (social) pressure on actually trying to keep up with it…)

I’ll be posting more details about how the Leanpub process works (for me at least) in the next week or two, but for now, here’s a link to the book: Wrangling F1 Data With R: A Data Junkie’s Guide.

Here’s the table of contents so far:

- Foreword

- A Note on the Data Sources

- Introduction

- Preamble

- What are we trying to do with the data?

- Choosing the tools

- The Data Sources

- Getting the Data into RStudio

- Example F1 Stats Sites

- How to Use This Book

- The Rest of This Book…

- An Introduction to RStudio and R dataframes

- Getting Started with RStudio

- Getting Started with R

- Summary

- Getting the data from the Ergast Motor Racing Database API

- Accessing Data from the ergast API

- Summary

- Getting the data from the Ergast Motor Racing Database Download

- Accessing SQLite from R

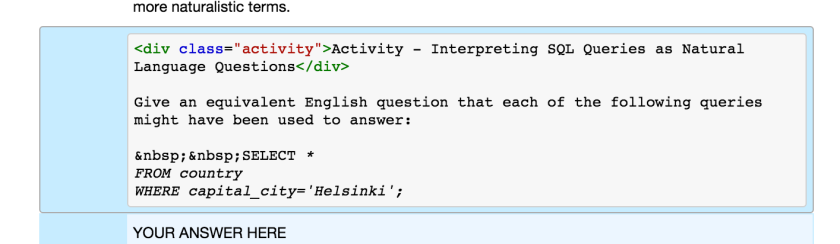

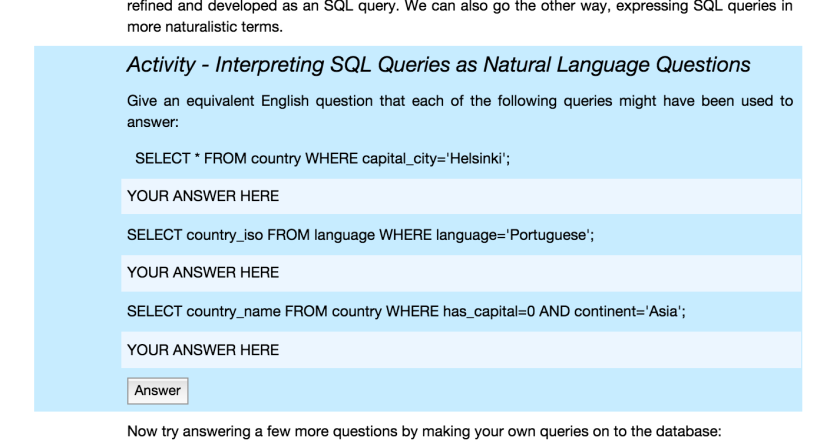

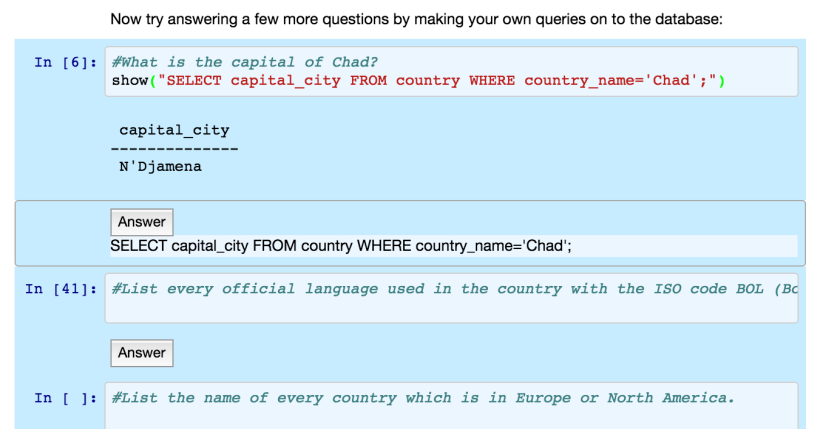

- Asking Questions of the ergast Data

- Summary

- Exercises and TO DO

- Data Scraped from the F1 Website

- Problems with the Formula One Data

- How to use the FormulaOne.com alongside the ergast data

- Reviewing the Practice Sessions

- The Weekend Starts Here

- Practice Session Data from the FIA

- Sector Times

- FIA Media Centre Timing Sheets

- A Quick Look at Qualifying

- Qualifying Session Position Summary Chart

- Another Look at the Session Tables

- Ultimate Lap Positions

- Lapcharts

- Annotated Lapcharts

- Race History Charts

- The Simple Laptime Chart

- Accumulated Laptimes

- Gap to Leader Charts

- The Lapalyzer Session Gap

- Eventually: The Race History Chart

- Pit Stop Analysis

- Pit Stop Data

- Total pit time per race

- Pit Stops Over Time

- Estimating pit loss time

- Tyre Change Data

- Career Trajectory

- The Effect of Age on Performance

- Statistical Models of Career Trajectories

- The Age-Productivity Gradient

- Summary

- Streakiness

- Spotting Runs

- Generating Streak Reports

- Streak Maps

- Team Streaks

- Time to N’th Win

- TO DO

- Summary

- Conclusion

- Appendix One – Scraping formula1.com Timing Data

- Appendix Two – FIA Timing Sheets

- Downloading the FIA timing sheets for a particular race

- Appendix – Converting the ergast Database to SQLite

If you think you deserve a free copy, let me know… ;-)