A recent provisional data release from the Ministry of Justice contains offence-level sentencing data from courts in England and Wales (going by the title of the release) for the period July 2010 to June 2011: “Published for the first time every sentence handed down at each court in the country between July 2010 and June 2011, along with the age and ethnicity of each offender.” Criminal Justice Statistics in England and Wales [data]

In this post, I’ll describe a couple of ways of working with the data to produce some simple graphical summaries using Google Fusion Tables and R…

…but first, a couple of observations:

– the web page subheading is “Quarterly update of statistics on criminal offences dealt with by the criminal justice system in England and Wales.”, but the sidebar includes the link to the 12 month set of sentencing data;

– the URL of the sentencing data is http://www.justice.gov.uk/downloads/publications/statistics-and-data/criminal-justice-stats/recordlevel.zip, which does not contain a time reference, even though the data covers a fixed period. What URL will be used if data for July 2011 to June 2012 is released in the same way next year?

The data is presented as a zipped CSV file, 5.4MB in the zipped form, and 134.1MB in the unzipped form.

The unzipped CSV file is too large to upload to a Google Spreadsheet or a Google Fusion Table, which are two of the tools I use for treating large CSV files as a database, so here are a couple of ways of getting into the data using tools I have to hand…

Unix Command Line Tools

I’m on a Mac, so like Linux users I have ready access to a terminal and several common Unix command-line tools that are ideally suited to wrangling text files (on Windows, I suspect you need to install something like Cygwin; a search for windows unix utilities should turn up other alternatives too).

In “Playing With Large (ish) CSV Files, and Using Them as a Database from the Command Line: EDINA OpenURL Logs” and “Postcards from a Text Processing Excursion” I give a couple of examples of how to get started with some of the Unix utilities, which we can crib from in this case. So for example, after unzipping the download to get recordlevel.csv, I can look at the first 10 rows by opening a terminal window, changing directory to the directory the file is in, and running the following command:

head recordlevel.csv
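As a small variation on that, it can be handy to see just the column headings, numbered by position, since several command-line tools refer to columns by number. The following is only a sketch: the function name list_columns is my own invention, and it assumes that the first line of the file is a header row and that none of the headings contains a quoted comma.

```shell
# list_columns FILE
# Print each column heading from the first line of a CSV file, numbered,
# one per line. Assumes the first line is a header row and that no heading
# contains a quoted comma (an assumption; not checked here).
list_columns() {
  # tr -d '\r' drops any Windows-style carriage return on the header line
  head -n 1 "$1" | tr -d '\r' | tr ',' '\n' | awk '{ print NR ": " $0 }'
}
```

Once the function has been pasted into the terminal session, it would be called as list_columns recordlevel.csv.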

Or I can pull out rows that contain a reference to the Isle of Wight using something like this command:

grep -i wight recordlevel.csv > recordsContainingWight.csv

(The -i reads: “ignoring case”; grep is a command that identifies rows that contain the search term (wight in this case) anywhere in the row. The > recordsContainingWight.csv says “send the result to the file recordsContainingWight.csv”.)
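One thing to watch with the plain grep approach is that the output only includes the header row if the header itself happens to match the search term, so the extracted file may end up without column names. Here is a minimal sketch of one way round that, assuming recordlevel.csv starts with a header row; the function name extract_rows and its argument order are hypothetical, not part of any standard tool.

```shell
# extract_rows PATTERN INPUT OUTPUT
# Copy the header row of INPUT to OUTPUT, then append every data row that
# matches PATTERN (case-insensitively, anywhere in the row).
extract_rows() {
  head -n 1 "$2" > "$3"
  # tail -n +2 skips the header so it cannot be matched and written twice
  tail -n +2 "$2" | grep -i -- "$1" >> "$3"
}
```

It would be called along the lines of extract_rows wight recordlevel.csv recordsContainingWight.csv. Like the original command, it still matches the term in any column, not just the court name.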

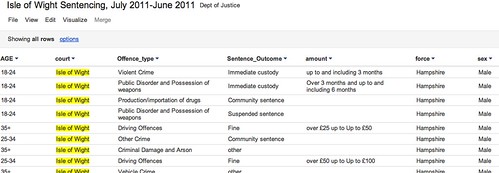

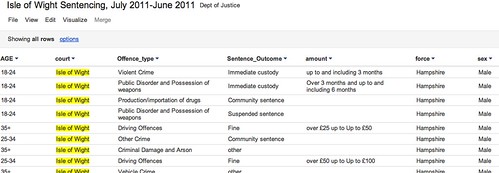

Having extracted rows that contain a reference to the Isle of Wight into a new file, I can upload this smaller file to a Google Spreadsheet, or as a Google Fusion Table such as this one: Isle of Wight Sentencing Fusion table.

Once in the fusion table, we can start to explore the data. So for example, we can aggregate the data around different values in a given column and then visualise the result (aggregate and filter options are available from the View menu; visualisation types are available from the Visualize menu):

We can also introduce filters to allow us to explore subsets of the data. For example, here are the offences committed by females aged 35+:

Looking at data from a single court may be of passing local interest, but the real data journalism is more likely to be focussed on finding mismatches in sentencing behaviour across different courts. (Hmm, unless we can get data on who passed sentences at a local level, and look to see if there are differences there?) That said, at a local level we could maybe try to look for outliers? As far as making comparisons goes, we do have Court and Force columns, so it would be possible to compare Force against Force and, within a Force area, Court against Court.
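As a rough first pass at that sort of comparison, back on the command line, something like the following might do for counting the number of records per court (or per force) across the whole file. It is only a sketch: the function name count_by_column is mine, the column number has to be whatever position the column of interest has in the header row, and it assumes no field contains a quoted comma, which I haven't checked for this dataset.

```shell
# count_by_column FILE N
# Count how many data rows share each value in column N (1-based) and list
# the values from most to least frequent. Assumes a header row and no
# quoted commas inside fields (an assumption; a real CSV parser is safer).
count_by_column() {
  # skip the header, keep column N, then tally identical values
  tail -n +2 "$1" | cut -d ',' -f "$2" | sort | uniq -c | sort -rn
}
```

So if the Court column turned out to be the third column, say, count_by_column recordlevel.csv 3 would list the courts in order of the number of sentences recorded against them.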

R/RStudio

If you really want to start working the data, then R may be the way to go… I use RStudio to work with R, so it’s a simple matter to import the whole of the recordlevel.csv dataset.

Once the data is loaded, I can use a regular expression to pull out the subset of the data corresponding once again to sentencing on the Isle of Wight (I apply the regular expression to the contents of the court column):

recordlevel <- read.csv("~/data/recordlevel.csv")

iw=subset(recordlevel,grepl("wight",court,ignore.case=TRUE))

We can then start to produce simple statistical charts based on the data. For example, a bar plot of the sentencing numbers by age group:

age=table(iw$AGE)

barplot(age, main="IW: Sentencing by Age", xlab="Age Range")

We can also start to look at combinations of factors. For example, how do offence types vary with age?

ageOffence=table(iw$AGE, iw$Offence_type)

barplot(ageOffence,beside=T,las=3,cex.names=0.5,main="Isle of Wight Sentences", xlab=NULL, legend = rownames(ageOffence))

If we remove the beside=T argument, we can produce a stacked bar chart:

barplot(ageOffence,las=3,cex.names=0.5,main="Isle of Wight Sentences", xlab=NULL, legend = rownames(ageOffence))

If we load the ggplot2 library, we have even more flexibility over the presentation of the graph, as well as what we can do with this sort of chart type. So for example, here’s a simple plot of the number of offences per offence type:

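#You may need to install ggplot2 if it isn't already installed: install.packages("ggplot2")

library(ggplot2)

#In current ggplot2 releases, theme() and element_text() replace the older opts() and theme_text()

ggplot(iw, aes(factor(Offence_type))) + geom_bar() + theme(axis.text.x=element_text(angle=-90)) + xlab('Offence Type')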

Alternatively, we can break down offence types by age:

ggplot(iw, aes(AGE))+ geom_bar() +facet_wrap(~Offence_type)

We can bring a bit of colour into a stacked plot that also displays the gender split on each offence:

ggplot(iw, aes(AGE,fill=sex))+geom_bar() +facet_wrap(~Offence_type)

One thing I’m not sure how to do is rip the data apart in a ggplot context so that we can display percentage breakdowns, so we could compare the percentage breakdown by offence type on sentences awarded to males vs. females, for example? If you do know how to do that, please post a comment below ;-)

PS Here’s an easy way of getting started with ggplot… use the online hosted version at http://www.yeroon.net/ggplot2/ using this data set: wightCrimRecords.csv; download the file to your computer then upload it as shown below:

PPS I got a little way towards identifying percentage breakdowns using a crib from here. The following command:

iwp=tapply(iw$Offence_type,iw$sex,function(x){prop.table(table(x))})

generates a (multidimensional) array for the responseVar (Offence) about the groupVar (sex). I don’t know how to generate a single data frame from this, but we can create separate ones for each sex as follows:

iwpMale=data.frame(iwp['Male'])

iwpFemale=data.frame(iwp['Female'])

We can then plot these percentages using constructions of the form:

ggplot(iwpMale)+geom_bar(aes(x=Male.x,y=Male.Freq),stat="identity")

What I haven’t worked out is how to map elegantly from the multidimensional array to a single data.frame. If you know how, please add a comment below… (I also posted a question on Cross Validated, the stats bit of Stack Exchange…)