With yet more scandal about horsemeat in the UK food chain (going against the “English taboo” on eating horsemeat, as French reports describe it), I thought I’d do a couple of quick sketches around companies mentioned in the scandal (I used this “How the horsemeat scandal unfolded” timeline to get a lead on the names of some of the companies involved).

Using the scraper I described in “Corporate Sprawl Sketch Trawls Using OpenCorporates”, I popped in a few different seed companies and pulled back lists of companies that shared two or more co-directors with the seed company (call these the “direct set” companies), as well as companies that shared two or more directors with the direct set companies. We can look at the graph structure of these companies and their directors to get a feel for the directorial organisation of these companies, or we can just look at the list of companies for an insight into what else the directors of companies connected to the seed company’s directors are engaged in. (It gets a bit fiddly trying to describe it in words – you have to get a feel for the graph;-)
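For anyone who wants to play along without the scraper, here’s a minimal sketch of that two-step expansion in Python. It assumes the director data has already been pulled down into a plain dictionary mapping each company name to the set of its directors’ names; the `Directors` type, the `shared_director_neighbours` and `sprawl` functions, and the toy company names are all hypothetical, this isn’t the actual ScraperWiki code, and it doesn’t touch the OpenCorporates API at all. It also reads “shared two or more directors with the direct set companies” as “shares at least two directors with at least one direct set company”.

```python
from typing import Dict, Set, Tuple

# Hypothetical in-memory stand-in for scraped data:
# each company name maps to the set of its directors' names.
Directors = Dict[str, Set[str]]


def shared_director_neighbours(company: str, data: Directors, min_shared: int = 2) -> Set[str]:
    """Companies (other than `company`) sharing at least `min_shared` directors with it."""
    mine = data.get(company, set())
    return {
        other
        for other, theirs in data.items()
        if other != company and len(mine & theirs) >= min_shared
    }


def sprawl(seed: str, data: Directors, min_shared: int = 2) -> Tuple[Set[str], Set[str]]:
    """Return (direct set, second-step set) for a seed company."""
    direct = shared_director_neighbours(seed, data, min_shared)
    second: Set[str] = set()
    for company in direct:
        # Assumed reading: linked to at least one direct set company.
        second |= shared_director_neighbours(company, data, min_shared)
    # Drop the seed and anything already in the direct set.
    second -= direct | {seed}
    return direct, second


if __name__ == "__main__":
    toy: Directors = {
        "Seed Ltd": {"A", "B", "C"},
        "Sister Ltd": {"A", "B", "D"},
        "Cousin Ltd": {"D", "B", "E"},
        "Unrelated Ltd": {"A", "X", "Y"},
    }
    direct, second = sprawl("Seed Ltd", toy)
    print(sorted(direct))  # ['Sister Ltd']
    print(sorted(second))  # ['Cousin Ltd']
```

“Cousin Ltd” only shares one director with the seed, so it turns up in the second step via “Sister Ltd”; that’s the sort of indirect link the graph view makes easier to see than the lists.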

Anyway, via OpenCorporates and ScraperWiki, here’s a list of:

- companies associated with Findus Limited;

- companies associated with a couple of subsidiaries of ABP Foods (I seeded my search using W.A.Turner Ltd and Freshlink Foods, identified from the list of ABP’s convenience foods companies).

(Note that these lists are not necessarily complete…)

(Hmm.. thinks.. maybe I should run the companies through my trademark gallery generator to see what well known food brand logos turn up?!)

Looking through the ABP Foods related companies list, I noticed a lot of companies associated with oil. A bit of casual searching turned up Agri, a subsidiary of ABP Food Group that, among other things, produces biodiesel from waste cooking oil. Good stuff… I know various folk try out converting their cars to run on oil that’s fallen out the back of a chip shop, so it’s encouraging, I guess, that waste oil is being used at a more industrial scale to produce biodiesel, rather than crops being grown specifically for that purpose.

As you do, I had a bit more of a search around biodiesel, and came across this PDF on “Biofuel Production From Animal Fats”. Among other things, I learned that “Almost 90% of 2008 Canadian biodiesel was produced from animal fats and greases”. Blimey…

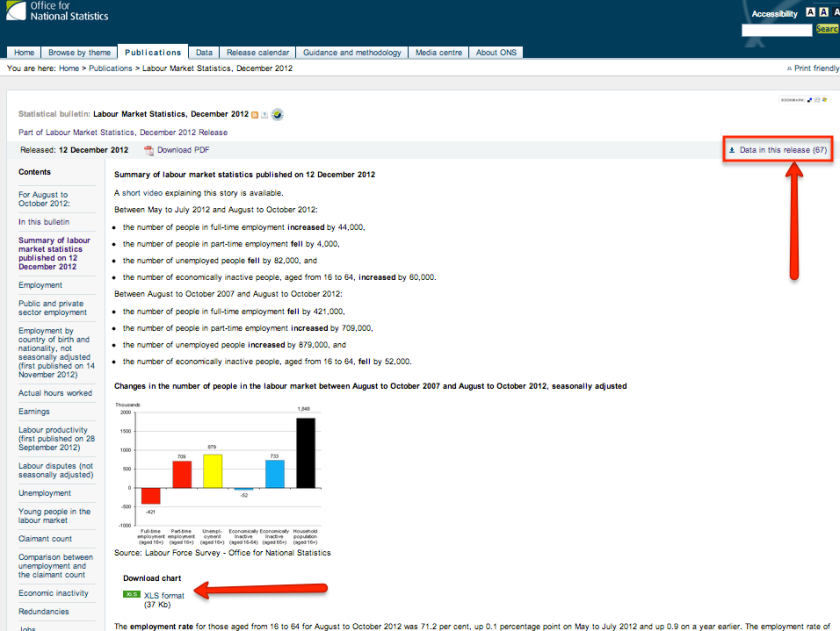

There was also this slide that gives a broad bit of context:

A bit more digging, and I came across some Environment Agency guidelines on waste and biodiesel, which summarise the “inputs” as follows:

2.3 Input materials

2.3.1 Known and defined input materials must be used. These are waste cooking oil, rendered animal fat (tallow) and chemical catalysts (typically sodium hydroxide or potassium hydroxide).

2.3.2 Wastes that are suitable for biodiesel production are classified under the following European Waste Catalogue (EWC) codes. Please note that not all wastes classified under these codes may be suitable for processing:

– 20.01.25: waste cooking oil originating in restaurants, catering facilities and kitchens (municipal wastes (household waste and similar commercial, industrial and institutional wastes) including separately collected fractions: edible oil and fat); and

– 02.02.99: rendered animal fat and waste cooking oil (wastes from the preparation and processing of animal carcasses, meat, fish and other foods of animal and vegetable origin other than from the sources listed at 20.01.25: wastes not otherwise specified).

2.3.3 The input wastes in 2.3.2 are classified as animal by-products under the EU Animal By-Products Regulations (ABPR) and the UK legislation making provision for the administration and enforcement of the Regulation. However, the process of turning waste classified under EWC code 20.01.25 into quality biodiesel is not controlled under ABPR.

2.3.4 The process of turning waste classified under EWC code 02.02.99 into quality biodiesel (with the exception of waste of vegetable origin where it can be demonstrated that such waste has been kept separate from waste of animal origin) is controlled under ABPR and as such must take place at premises subject to approval under Article 13 ABPR. Processing must be in accordance with the requirements of Annex V Chapter III ABPR and Annex IV Regulation (EC) No 92/2005.

2.3.5 In certain instances quality biodiesel may be blended with other biodiesel made from virgin oil at the site of manufacture. Provided that the biodiesel meets the specifications defined in BS EN 14214 in the case of biodiesel intended for use as an automotive fuel, or BS EN 14213 in the case of biodiesel intended for use as a heating fuel, this is acceptable.

2.3.6 To ensure that only appropriate input materials are used in the manufacture of quality biodiesel, the producer must have and maintain procedures in the form of acceptance criteria.

2.3.7 The acceptance criteria must specify:

– the types of waste cooking oils and rendered animal fats that are accepted;

– the quantity and method of acceptance; and

– the date of delivery and source of the waste cooking oil and rendered animal fats.

2.3.8 Input materials must be stored at the site of quality biodiesel production in accordance with all relevant regulatory controls, which include the Control of Pollution (Oil Storage) (England) Regulations 2001.
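Reading the classification rules in 2.3.2 to 2.3.4 and the acceptance criteria in 2.3.7 as a little decision procedure helps me, at least, see how they fit together. What follows is a rough Python sketch of my own reading of the quoted text, not anything the Environment Agency publishes: the `Delivery` record, its fields (including measuring quantity in litres) and the two helper functions are hypothetical, and it doesn’t attempt to model the “method of acceptance” or the storage rules in 2.3.8.

```python
from dataclasses import dataclass
from datetime import date

# EWC codes quoted in section 2.3.2 of the guidance.
KITCHEN_WASTE_OIL = "20.01.25"  # restaurants, catering facilities, kitchens
PROCESSING_WASTE = "02.02.99"   # food/animal processing wastes not otherwise specified
ACCEPTED_CODES = {KITCHEN_WASTE_OIL, PROCESSING_WASTE}
# "Known and defined input materials" from 2.3.1 (catalysts left out here).
ACCEPTED_MATERIALS = {"waste cooking oil", "rendered animal fat"}


@dataclass
class Delivery:
    """Hypothetical record of one delivery of input material."""
    ewc_code: str
    material: str
    quantity_litres: float  # unit is an assumption; the guidance doesn't say
    delivered_on: date
    source: str
    vegetable_only_kept_separate: bool = False


def needs_abpr_approved_premises(d: Delivery) -> bool:
    """My reading of 2.3.3-2.3.4: processing 20.01.25 waste isn't ABPR-controlled;
    processing 02.02.99 waste is, unless it is vegetable-origin waste
    demonstrably kept separate from animal-origin waste."""
    if d.ewc_code == KITCHEN_WASTE_OIL:
        return False
    if d.ewc_code == PROCESSING_WASTE:
        return not d.vegetable_only_kept_separate
    raise ValueError(f"EWC code {d.ewc_code} is not a listed biodiesel input")


def meets_acceptance_record(d: Delivery) -> bool:
    """Rough check that a delivery records what 2.3.7 asks for:
    an accepted type of waste, a quantity, and a source (the date is a required field)."""
    return (
        d.ewc_code in ACCEPTED_CODES
        and d.material in ACCEPTED_MATERIALS
        and d.quantity_litres > 0
        and bool(d.source.strip())
    )


if __name__ == "__main__":
    # Made-up example deliveries.
    chip_shop = Delivery("20.01.25", "waste cooking oil", 500.0, date(2013, 2, 1), "restaurant")
    tallow = Delivery("02.02.99", "rendered animal fat", 2000.0, date(2013, 2, 1), "renderer")
    print(needs_abpr_approved_premises(chip_shop))  # False
    print(needs_abpr_approved_premises(tallow))     # True
```

The interesting bit is the asymmetry: chip shop oil and rendered fat can end up in the same biodiesel, but only the latter route (as I read it) needs ABPR-approved premises.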

So I may be naive, but that was news to me…