One of the blogs on my “must read” list is Bill Slawski’s SEO by the Sea, which regularly comments on a wide variety of search-related patents obtained by Google, both recent and older, and on what they might mean…

The US patent system is completely dysfunctional, of course, acting as a way of preventing innovative competition in a way that I think probably wasn’t intended by its framers. But it does provide an insight into some of the crazy bar-talk ideas that Silicon Valley types thought they might just go and try out on millions of people, or perhaps already are trying out.

As an example, here are a couple of Facebook patents that recently crossed my radar.

First up, USPTO 20150124107 – ASSOCIATING CAMERAS WITH USERS AND OBJECTS IN A SOCIAL NETWORKING SYSTEM:

Images uploaded by users of a social networking system are analyzed to determine signatures of cameras used to capture the images. A camera signature comprises features extracted from images that characterize the camera used for capturing the image, for example, faulty pixel positions in the camera and metadata available in files storing the images. Associations between users and cameras are inferred based on actions relating users with the cameras, for example, users uploading images, users being tagged in images captured with a camera, and the like. Associations between users of the social networking system related via cameras are inferred. These associations are used beneficially for the social networking system, for example, for recommending potential connections to a user, recommending events and groups to users, identifying multiple user accounts created by the same user, detecting fraudulent accounts, and determining affinity between users.

Which is to say: traces of the flaws in a particular camera that are passed through to each photograph are distinctive enough to identify that camera uniquely. (I note that academic research picked up on by Bruce Schneier demonstrated this getting on for a decade ago: Digital Cameras Have Unique Fingerprints.) So when a photo is uploaded to Facebook, Facebook can associate it with a particular camera. And by looking at who is uploading the photos, a particular camera, as identified by the signature baked into its photographs, can be associated with a particular person. Another form of participatory surveillance, methinks.
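To make the “faulty pixel positions” idea a little more concrete, here’s a toy sketch. It assumes each photo is a small grayscale grid of values from 0 to 255, treats a pixel as “hot” if it sits at or above a made-up brightness threshold in every photo from the same camera, and compares two signatures using Jaccard similarity. The threshold, function names and data are all hypothetical; the academic work extracts sensor noise patterns, which is considerably more involved, and nothing here describes Facebook’s actual method.

```python
from typing import List, Set, Tuple

Image = List[List[int]]  # grayscale pixel values, 0 to 255
Pixel = Tuple[int, int]  # (row, col)

# HYPOTHETICAL threshold: a pixel this bright in every photo is treated as stuck/hot.
HOT_THRESHOLD = 250


def hot_pixels(image: Image, threshold: int = HOT_THRESHOLD) -> Set[Pixel]:
    """Return the positions of pixels at or above the threshold in one image."""
    return {
        (r, c)
        for r, row in enumerate(image)
        for c, value in enumerate(row)
        if value >= threshold
    }


def camera_signature(images: List[Image]) -> Set[Pixel]:
    """Hot pixels present in *every* image: bright scene highlights move
    from shot to shot, but a defective sensor pixel stays put."""
    sets = [hot_pixels(img) for img in images]
    return set.intersection(*sets) if sets else set()


def similarity(sig_a: Set[Pixel], sig_b: Set[Pixel]) -> float:
    """Jaccard similarity between two signatures (1.0 means identical)."""
    if not sig_a and not sig_b:
        return 0.0
    return len(sig_a & sig_b) / len(sig_a | sig_b)


if __name__ == "__main__":
    # Two photos from one made-up camera: pixels (0, 1) and (2, 2) are stuck bright.
    camera_a_photos = [
        [[10, 255, 30], [40, 50, 60], [70, 80, 252]],
        [[255, 255, 12], [90, 14, 20], [33, 44, 251]],
    ]
    sig = camera_signature(camera_a_photos)
    print(sorted(sig))  # [(0, 1), (2, 2)]
    new_upload = [[5, 254, 9], [8, 7, 6], [3, 2, 253]]
    print(similarity(sig, hot_pixels(new_upload)))  # 1.0
```

Note how the bright (0, 0) pixel in the second photo drops out of the signature because it isn’t bright in the first one; that intersection step is what separates sensor defects from scene content in this toy version.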

Note that this is different to the various camera settings that get baked into photograph metadata (you know, that “administrative” data stuff that folk would have you believe doesn’t really reveal anything about the content of a communication…). I’m not sure to what extent that data helps narrow down the identity of a particular camera, particularly when combined with other bits of info in a data mosaic, but it doesn’t take that many bits of data to uniquely identify a device. Your web browser’s settings are one example: they are revealed through browser metadata to the web servers of the sites you visit, and can be enough to uniquely identify your browser. (See, e.g., this paper from the EFF – How Unique Is Your Web Browser? [PDF] – and the associated test site: test your browser’s uniqueness.) And if your camera’s also a phone, there’ll be a wealth of other bits of metadata that let you associate camera with phone, and so on.
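Some rough arithmetic on “not that many bits”: singling out one device among N needs about log2(N) bits of identifying information, so around 33 bits is enough for a population of eight billion. The EFF paper measures identifying information in a similar way, as self-information in bits. The sketch below uses made-up attribute frequencies and assumes the attributes are independent (so their bits simply add), which real metadata fields are not; treat it as back-of-the-envelope only.

```python
import math
from typing import Dict


def bits_to_single_out(population: int) -> float:
    """Bits of identifying information needed to pick one item out of `population`."""
    return math.log2(population)


def surprisal_bits(probability: float) -> float:
    """Self-information of observing a value with this probability, in bits."""
    return -math.log2(probability)


def fingerprint_bits(attribute_probs: Dict[str, float]) -> float:
    """Total bits for a combination of attribute values, ASSUMING independence.
    Real camera/browser attributes are correlated, so this overstates the total."""
    return sum(surprisal_bits(p) for p in attribute_probs.values())


if __name__ == "__main__":
    # HYPOTHETICAL frequencies: the share of devices showing each observed value.
    observed = {
        "camera_model": 1 / 512,      # 9 bits
        "firmware_version": 1 / 16,   # 4 bits
        "timezone": 1 / 32,           # 5 bits
        "screen_resolution": 1 / 64,  # 6 bits
    }
    print(round(bits_to_single_out(8_000_000_000), 1))  # 32.9
    print(fingerprint_bits(observed))  # 24.0, i.e. one in 2**24 (about 16.8 million)
```

Four fairly mundane fields already get you most of the way there; a few more, or one rare value, closes the gap.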

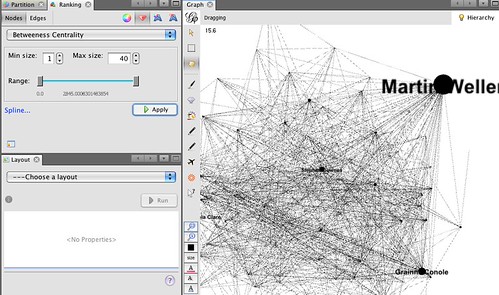

Facebook’s face recognition algorithms can also work out who’s in an image, so more relationships and associations there. If kids aren’t being taught about graph theory in school from a very young age, they should be… (So for example, here’s a nice story about what you can do with edges: SELECTION AND RANKING OF COMMENTS FOR PRESENTATION TO SOCIAL NETWORKING SYSTEM USERS. Here’s a completely impenetrable one: SYSTEMS, METHODS, AND APPARATUSES FOR IMPLEMENTING AN INTERFACE TO VIEW AND EXPLORE SOCIALLY RELEVANT CONCEPTS OF AN ENTITY GRAPH.)
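As a small example of what you can do with edges: the patent abstract talks about inferring associations between users “related via cameras”. One simple way to picture that (my assumption, not the patent’s stated method) is to treat uploads and tags as edges between users and camera signatures, then project that two-sided graph onto users, linking any two people who share a camera. The user and camera names below are made up.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]  # (user, camera_signature_id); both HYPOTHETICAL labels


def users_by_camera(edges: List[Edge]) -> Dict[str, Set[str]]:
    """Group users by the camera they are connected to (duplicates collapse)."""
    cams: Dict[str, Set[str]] = defaultdict(set)
    for user, camera in edges:
        cams[camera].add(user)
    return cams


def user_links(edges: List[Edge]) -> Dict[Tuple[str, str], int]:
    """Project the user-camera graph onto users: two users are linked if they
    share a camera, and the weight counts how many cameras they share."""
    links: Dict[Tuple[str, str], int] = defaultdict(int)
    for users in users_by_camera(edges).values():
        for a, b in combinations(sorted(users), 2):
            links[(a, b)] += 1
    return dict(links)


if __name__ == "__main__":
    edges = [
        ("alice", "cam1"),  # alice uploaded photos taken with cam1
        ("bob", "cam1"),    # bob was tagged in a cam1 photo
        ("alice", "cam2"),
        ("bob", "cam2"),
        ("carol", "cam2"),
    ]
    print(user_links(edges))
    # {('alice', 'bob'): 2, ('alice', 'carol'): 1, ('bob', 'carol'): 1}
```

Alice and Bob share two cameras here, which is the sort of weighted edge you could imagine feeding into “people you may know” style recommendations or affinity scores.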

Here’s another one – hinting at Facebook’s role as a future publisher:

SOCIAL NETWORKING SYSTEM DATA EXCHANGE

An online publisher provides content items such as advertisements to users. To enable publishers to provide content items to users who meet targeting criteria of the content items, an exchange server aggregates data about the users. The exchange server receives user data from two or more sources, including a social networking system and one or more other service providers. To protect the user’s privacy, the social networking system and the service providers may provide the user data to the exchange server without identifying the user. The exchange server tracks each unique user of the social networking system and the service providers using a common identifier, enabling the exchange server to aggregate the users’ data. The exchange server then applies the aggregated user data to select content items for the users, either directly or via a publisher.
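The abstract leaves the mechanics open, but the aggregation step is easy enough to picture. Here’s a toy sketch in which two sources key their user records on a shared pseudonymous ID, the exchange merges them, and content items are picked when all of their targeting criteria are met. How that common identifier is derived (here, an HMAC of a normalised e-mail address under a made-up shared key) is purely my assumption; the abstract doesn’t say. It’s worth bearing in mind, though, that any stable shared identifier is exactly what lets the records be joined, whether or not it “identifies the user”.

```python
import hashlib
import hmac
from collections import defaultdict
from typing import Dict, List, Set

# HYPOTHETICAL shared secret; the patent abstract does not say how the
# common identifier is derived.
SHARED_KEY = b"example-shared-key"


def common_id(email: str) -> str:
    """Pseudonymous identifier that each data source can compute independently."""
    normalised = email.strip().lower().encode("utf-8")
    return hmac.new(SHARED_KEY, normalised, hashlib.sha256).hexdigest()


def aggregate(*sources: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Merge per-source attribute sets keyed by the common identifier."""
    merged: Dict[str, Set[str]] = defaultdict(set)
    for source in sources:
        for uid, attributes in source.items():
            merged[uid] |= attributes
    return dict(merged)


def select_items(attributes: Set[str], items: Dict[str, Set[str]]) -> List[str]:
    """Return content items whose targeting criteria are all met by the user."""
    return sorted(name for name, criteria in items.items() if criteria <= attributes)


if __name__ == "__main__":
    uid = common_id("Someone@Example.com")
    social = {uid: {"likes:cycling", "age:25-34"}}  # from the social network
    retailer = {uid: {"bought:bike-helmet"}}        # from another service provider
    profiles = aggregate(social, retailer)
    ads = {
        "bike-lights": {"likes:cycling", "bought:bike-helmet"},
        "baby-buggy": {"age:25-34", "has:newborn"},
    }
    print(select_items(profiles[uid], ads))  # ['bike-lights']
```

Neither source alone could target the “bike-lights” item; it only matches once the two records are joined on the shared ID.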

I don’t really see what’s clever about this – using an ad-serving engine to serve content – even though Business Insider try to talk it up (Facebook just filed a fascinating patent that could seriously hurt Google’s ad revenue). I pondered something related to this way back when, but never really followed it through: Contextual Content Server, Courtesy of Google? (2008), Contextual Content Delivery on Higher Ed Websites Using Ad Servers (2010), or Using AdServers Across Networked Organisations (2014). Note also this remark on the University of Bedfordshire using Google Banner Ads as On-Campus Signage (2011).

(By the by, I also note that Google has a complementary service where it makes content recommendations relating to content on your own site via AdSense widgets: Google Matched Content.)

PS Not totally unrelated, perhaps: a recent essay by Bruce Schneier on the need to regulate the emerging automatic face recognition industry, Automatic Face Recognition and Surveillance.