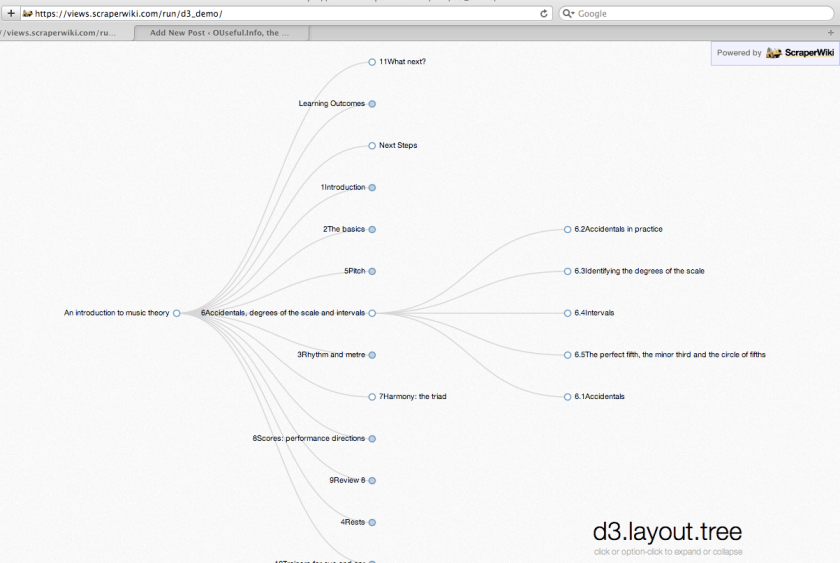

I’m not sure how to start this post, so I’ll open it with a crappy graph:

As the internet changes the way we access information – and knowledge – and the availability of information influences how we can hold people to account, I find myself increasingly drawn to the way that academia/education, the press, and that ill-defined sector I’ve lumped together as public policy development/government can influence and impact on each other.

All three sectors engage in research and investigation to different degrees, on different timescales, and with different budgets. A recent report by the US Federal Communications Commission (FCC) on Information Needs of Communities included a chapter on The Media Food Chain and the Functions of Journalism, which references the “Tom Rosenstiel model” of journalism as a service providing the following eight functions: Authentication, Watch Dog, Witness, Forum Leader, Sense Making, Smart Aggregation, Empowerment, and Role Model. As I keep trying to make sense of what it is that academia actually does (in both its research and teaching/education modes), it seems to me that there is an overlap with many of these functions, although maybe with a different emphasis and supported by a different evidentiary tradition. (On my to do list is the application of these lenses to the functions of academia…unless someone has already done such a mapping? It may also be interesting to consider a view of academia via Kovach and Rosenstiel’s Nine Principles of Journalism (as critiqued, for example, in the Nieman Reports special issue from 2001 on “The Elements of Journalism”).) (For reference, the FCC report points to Rosenstiel’s model via a Pew Research Center report on the future of public relations.)

Here are a few more pieces that I think are part of this jigsaw:

- Alex Bailin QC and Edward Craven writing in the Inforrm blog about Investigative journalism and the criminal law, a review of the DPP’s recent Interim guidelines for prosecutors on assessing the public interest in cases affecting the media. I don’t think there are similar guidelines for academic researchers, but maybe that’s because academic researchers are not in the business of holding the powers that be to account in the way the press does, don’t tend to engage in research in “the public interest” (is this true???), and don’t use research methods that border on the illegal in the pursuit of matters “in the public interest”? (Would such acts get through the research ethics committees, I wonder? In what cases would/do “public interest” and “topic of academic research” intersect?)

- Academia’s relationship with the Freedom of Information Act: as public bodies, universities are open to FOI requests, as is publicly funded academic research (e.g. see JISC’s Freedom of Information and research data: Questions and answers; see also this Universities UK submission to inform the post-legislative scrutiny of the Freedom of Information Act from February 2012, and RIN: Freedom of information: helping researchers meet the challenge). But FOI can also be used as a research tool in its own right (for example, Freedom of information – a tool for researchers). And if FOI requests are used for research, does the act of asking in some sense hold the body to which the request was made to account for something?

- Several years ago, there was a shift in science communication from the notion of “Public Understanding of Science” to “Public Engagement with Science”, in particular, the “upstreaming” of engagement (Demos: See-through science: Why public engagement needs to move upstream), summarised by the OU’s Richard Holliman as follows:

What is upstream public engagement with science (and technology; PEST)?

A concept developed in response to perceived limitations of the ‘public understanding of science’. Upstream PEST, which was developed in response to detailed research studies, has been used in a number of different ways in recent years. It is generally understood to be based on a more sophisticated model of science communication – one that acknowledges that communication does (and should) flow in more than one direction, i.e. not just from scientists to the public, but also from various publics to scientists, and from publics to publics, and from various other stakeholders to publics, scientists, and so on.

Those who advocate an upstream PEST approach argue that useful and relevant expertise is distributed among different publics, stakeholders and scientific experts. Expertise is more distributed, nuanced and contingent, particularly in relation to frontier science where complexity, uncertainty and controversy abound. It follows that for (upstream) PEST approaches to be successful, a range of publics should be given early (in the case of emerging technoscience, this should be upstream), and then regular, routine opportunities to contribute to the governance of science.

What’s important here, I think, is the notion that the governance of research effort, and at one or two steps removed, the development of public research funding programmes, is influenced by conversations between academia and its publics.

- Notwithstanding the current debate around the commercial business models based around the publication of academic research papers (e.g. David Willetts on Open, free access to academic research? and the Working Group on Expanding Access to Published Research Findings), it’s worth noting that “there’s more” when it comes to the sort of commons offered by publications that are recognised as academic publications. As noted above, academic research may tend to matters “academic” rather than “in the public interest”, although we might argue that it is in the public interest to promote claims supported by the higher standards (?) of truth that are expected in the academic tradition, and as resulting from the scientific method, above conjecture, personal opinion and other less defensible routes to “knowledge”. In the Defamation Bill recently introduced to the House of Commons, I note that “Peer-reviewed statement[s] in scientific or academic journal etc” are given special treatment:

6 Peer-reviewed statement in scientific or academic journal etc

(1) The publication of a statement in a scientific or academic journal is privileged if the following conditions are met.

(2) The first condition is that the statement relates to a scientific or academic matter.

(3) The second condition is that before the statement was published in the journal an independent review of the statement’s scientific or academic merit was carried out by—

(a) the editor of the journal, and

(b) one or more persons with expertise in the scientific or academic matter concerned.

(4) Where the publication of a statement in a scientific or academic journal is privileged by virtue of subsection (1), the publication in the same journal of any assessment of the statement’s scientific or academic merit is also privileged if —

(a) the assessment was written by one or more of the persons who carried out the independent review of the statement; and

(b) the assessment was written in the course of that review.

(5) Where the publication of a statement or assessment is privileged by virtue of this section, the publication of a fair and accurate copy of, extract from or summary of the statement or assessment is also privileged.

(6) A publication is not privileged by virtue of this section if it is shown to be made with malice.

(7) Nothing in this section is to be construed—

(a) as protecting the publication of matter the publication of which is prohibited by law;

(b) as limiting any privilege subsisting apart from this section.

(8) The reference in subsection (3)(a) to “the editor of the journal” is to be read, in the case of a journal with more than one editor, as a reference to the editor or editors who were responsible for deciding to publish the statement concerned.

Note that for the purposes of the Bill, “statement” means “words, pictures, visual images, gestures or any other method of signifying meaning”.

As far as the academic/press/policy confusion goes, it is worth noting here that academic communications are given privileged treatment. I’m not sure what defines a communication as academic, though.

- As the previous item suggests, an important role played by academics engaged in research is their engagement with the process of peer review. To what extent does this – or should it – also cover peer review of policy, either formally, or via an open, opt-in, peer review process? The Government Code of Practice on Consultation starts off with the following criterion:

When to consult

Formal consultation should take place at a stage when there is scope to influence the policy outcome.

1.1 Formal, written, public consultation will often be an important stage in the policymaking process. Consultation makes preliminary analysis available for public scrutiny and allows additional evidence to be sought from a range of interested parties so as to inform the development of the policy or its implementation.

1.2 It is important that consultation takes place when the Government is ready to put sufficient information into the public domain to enable an effective and informed dialogue on the issues being consulted on. But equally, there is no point in consulting when everything is already settled. The consultation exercise should be scheduled as early as possible in the project plan as these factors allow.

1.3 When the Government is making information available to stakeholders rather than seeking views or evidence to influence policy, e.g. communicating a policy decision or clarifying an issue, this should not be labelled as a consultation and is therefore not in the scope of this Code. Moreover, informal consultation of interested parties, outside the scope of this Code, is sometimes an option and there is separate guidance on this.

1.4 It will often be necessary to engage in an informal dialogue with stakeholders prior to a formal consultation to obtain initial evidence and to gain an understanding of the issues that will need to be raised in the formal consultation. These informal dialogues are also outside the scope of this code.

1.5 Over the course of the development of some policies, the Government may decide that more than one formal consultation exercise is appropriate. When further consultation is a more detailed look at specific elements of the policy, a decision will need to be taken regarding the scale of these additional consultative activities. In deciding how to carry out such re-consultation, the department will need to weigh up the level of interest expressed by consultees in the initial exercise and the burden that running several consultation exercises will place on consultees and any potential delay in implementing the policy. In most cases where additional exercises are appropriate, consultation on a more limited scale will be more appropriate. In these cases this Code need not be observed but may provide useful guidance.

…

Note also criterion 5:

The burden of consultation

Keeping the burden of consultation to a minimum is essential if consultations are to be effective and if consultees’ buy-in to the process is to be obtained.

5.1 When preparing a consultation exercise it is important to consider carefully how the burden of consultation can be minimised. While interested parties may welcome the opportunity to contribute their views or evidence, they will not welcome being asked the same questions time and time again. If the Government has previously obtained relevant information from the same audience, consideration should be given as to whether this information could be reused to inform the policymaking process, e.g. is the information still relevant and were all interested groups canvassed? Details of how any such information was gained should be clearly stated so that consultees can comment on the existing information or contribute further to this evidence-base.

5.2 If some of the information that the Government is looking for is already in the public domain through market research, surveys, position papers, etc., it should be considered how this can be used to inform the consultation exercise and thereby reduce the burden of consultation.

5.3 In the planning phase, policy teams should speak to their Consultation Coordinator and other policy teams with an interest in similar sectors in order to look for opportunities for joining up work so as to minimise the burden of consultations aimed at the same groups.

5.4 Consultation exercises that allow consultees to answer questions directly online can help reduce the burden of consultation for those with the technology to participate. However, the bureaucracy involved in registering (e.g. to obtain a username and password) should be kept to a minimum.

5.5 Formal consultation should not be entered into lightly. Departmental Consultation Coordinators and, most importantly, potential consultees will often be happy to advise about the need to carry out a formal consultation exercise and acceptable alternatives to a formal exercise.

To a certain extent, we might consider this a model for supporting upstream engagement with policy development. (The reality seems to me, more often than not, that consultation exercises are actually pre-emptive PR strikes that allow arguments against an already decided-upon policy to be defused before the formal announcement of the policy in the hours after (or even before…) the consultation closes, notwithstanding point 1.2;-)

We might also see consultation exercises, and requests for comments on reports, as an opportunity for academia to engage in open peer review of policy developments, as well as a ‘pathway to impact’ (e.g. Research Councils UK – Pathways to Impact. Hmmm…the Pathways to Impact route map may be handy for putting together a slightly more detailed version of the crappy graph…;-) As I’ve posted previously (e.g. News, Courses and Scrutiny), there may also be opportunities for using consultations as a hook, or frame, for educational materials in context, where the context is (evidence-based) policy development and the consultation around it.

So – more pieces… Now I just need to start putting them together…